Adding Robots.txt and Sitemap.xml to a Squarespace site

I work with a few clients who use my services to design and build their Squarespace sites while also contracting with "traditional SEO experts." Calling themselves "traditional" sort of makes me laugh, because the business of SEO is relatively new. Is search engine optimization art, science, or smoke & mirrors? I will cover REAL SEO sometime soon, and will call upon an expert to weigh in. But I digress...

THE BACKSTORY

One SEO professional in particular was used to working on WordPress sites, so she knew the steps to modify the robots.txt and sitemap.xml files. The site in question had (allegedly) been juiced up with "black-ops SEO tactics," which got it blocked from Google's index entirely. Yes, Google blacklisted them, which is a bad thing to happen to any site.

The upstanding expert convinced me that, even though Squarespace says it isn't necessary, we needed to provide what Google was asking for to rectify the situation.

SO WHAT ARE THESE FILES?

Robots.txt tells search bots which parts of the site they are allowed to crawl.

Web Robots (also known as Web Wanderers, Crawlers, or Spiders), are programs that traverse the Web automatically. Search engines such as Google use them to index the web content, spammers use them to scan for email addresses, and they have many other uses. — robotstxt.org
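To make that concrete, here is a minimal sketch of how a well-behaved crawler reads robots.txt, using the `urllib.robotparser` module from Python's standard library. The robots.txt content, the www.example.com domain, and the /config/ path are placeholders made up for illustration, not the file Squarespace actually serves.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt; the domain and the /config/ path are hypothetical.
ROBOTS_TXT = """\
User-agent: *
Disallow: /config/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def parse_robots(text: str) -> RobotFileParser:
    """Parse robots.txt text the way a well-behaved crawler would."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rp = parse_robots(ROBOTS_TXT)
for url in ("https://www.example.com/blog/first-post",
            "https://www.example.com/config/pages"):
    # can_fetch() answers: may this user agent crawl this URL?
    verdict = "allowed" if rp.can_fetch("Googlebot", url) else "blocked"
    print(f"{url}: {verdict}")

# Any Sitemap: lines are collected separately from the allow/disallow rules.
print("Sitemaps:", rp.site_maps())
```

One caveat: Python's parser applies the first rule that matches, while Google uses the most specific matching rule, so this sketch lists the narrow Disallow line before the broad Allow line to get the same answer either way.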

Sitemap.xml is basically an index of the site's URLs.

The Sitemaps protocol allows a webmaster to inform search engines about URLs on a website that are available for crawling. —wikipedia.org/wiki/sitemaps
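Here's a minimal sketch of what a generated sitemap contains, built with Python's standard `xml.etree.ElementTree`. It assumes you have a plain list of page URLs and last-modified dates; the `build_sitemap` helper, the domain, and the dates are hypothetical. Each page becomes a `<url>` entry with a required `<loc>` and an optional `<lastmod>`, all inside a `<urlset>` that carries the sitemaps.org namespace.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps protocol at sitemaps.org.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Build sitemap XML from (loc, lastmod) pairs; lastmod uses YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # ElementTree escapes & and < for us
        ET.SubElement(url, "lastmod").text = lastmod  # bump this when the page changes
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages on a placeholder domain.
xml_bytes = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog?category=news&page=2", "2024-01-20"),
])
print(xml_bytes.decode("utf-8"))
```

Note the lastmod values: every time you add or change a page, a static file like this has to be regenerated and re-uploaded, which is the maintenance cost of going this route.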

YOUR CONTENT IS WHAT MATTERS MOST!

Let me take another moment to clear my throat and tell it like it is...

In my opinion, adding a sitemap file may help your site today (when the file is installed) and over the next few weeks (when you start to see results in Google). But if you're constantly updating your site (as you very well should be), the sitemap file will need continual updating too.

This is why, when consulting with a client on the phone, I always recommend that they add a blog or journal to their site. Those bots want to see fresh, active content, and there's no better way to serve up a plate of steaming-hot content than with a blog.

Be sure to read the FAQs at sitemaps.org for more information.

OK, LET'S DO THIS

All of that aside, let's completely blow the mystery away by adding these files to your site. Simply do the following...

1. Log into the admin area of your Squarespace site.

2. Browse to FILE STORAGE under the Data & Media heading.

3. Upload your sitemap.xml file. You can put it anywhere, because we're going to map to this file next.

NOTE: This file can be auto-generated by any of hundreds of online tools. Be sure you trust the generator, and read through the file to make sure it is free of errors and spammy links.

Now let's map to our uploaded sitemap.xml file...

NOTE: Bots look for this file in the ROOT of your site, in other words at www.domain.com/sitemap.xml. That's why we need to add a 301 redirect from that path to the uploaded file.

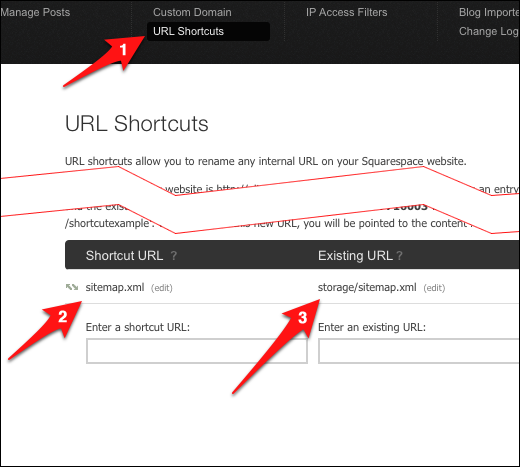

4. Go to URL SHORTCUTS under the Structure heading.

5. Enter "sitemap.xml" in the Shortcut URL field and "storage/sitemap.xml" in the Existing URL field. Now, when the path /sitemap.xml is requested, the site knows where to go to complete that path: /storage/sitemap.xml (the file we uploaded).

6. Test the URL by visiting www.domain.com/sitemap.xml in your browser. If your file and redirect are in place, you should see the XML data in your browser.
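If you want a check that is a little more thorough than eyeballing the XML in a browser, here is a minimal sketch using only Python's standard library. Both functions are hypothetical helpers, not a Squarespace tool: `fetch_sitemap` requests the public URL with `urllib.request` (which follows the 301 automatically and reports where it landed), and `audit_sitemap` confirms the body is a sitemap and flags any `<loc>` pointing at a different host, since the Sitemaps protocol expects every URL in the file to belong to the site it describes.

```python
import urllib.request
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace required by the Sitemaps protocol, in ElementTree's {uri} form.
NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def fetch_sitemap(url: str, timeout: float = 10.0) -> tuple[str, bytes]:
    """Fetch url and return (final URL after any redirects, response body)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.geturl(), resp.read()


def audit_sitemap(body: bytes, expected_host: str) -> list[str]:
    """Return a list of problems in sitemap XML; an empty list means it looks clean."""
    try:
        root = ET.fromstring(body)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag not in (f"{NS}urlset", f"{NS}sitemapindex"):
        return [f"unexpected root element: {root.tag}"]
    problems = []
    for loc in root.iter(f"{NS}loc"):
        url = (loc.text or "").strip()
        # urlparse lowercases hostnames, so compare against a lowercased host.
        if urlparse(url).hostname != expected_host.lower():
            problems.append(f"foreign URL: {url}")
    return problems
```

For example, `final_url, body = fetch_sitemap("https://www.example.com/sitemap.xml")` followed by `audit_sitemap(body, "www.example.com")` (placeholder domain) should return an empty list for a clean file and one message per suspicious entry.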

That's all you need to do. For the robots.txt file, take the same steps as above: upload the file, then add a shortcut from "robots.txt" to "storage/robots.txt".
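If you'd rather write robots.txt yourself than use a generator, here is a minimal sketch that assumes you want to let every crawler in and simply point them at your sitemap. `build_robots_txt` and the example.com URL are hypothetical; the `Sitemap:` line itself is part of the Sitemaps protocol and is how crawlers discover a sitemap from robots.txt.

```python
from typing import Sequence


def build_robots_txt(sitemap_url: str, disallow: Sequence[str] = ()) -> str:
    """Return robots.txt text that applies to all crawlers, plus a Sitemap line."""
    lines = ["User-agent: *"]
    if disallow:
        lines += [f"Disallow: {path}" for path in disallow]
    else:
        lines.append("Disallow:")  # an empty Disallow value blocks nothing
    # The Sitemap directive tells crawlers where to find the sitemap file.
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


print(build_robots_txt("https://www.example.com/sitemap.xml"))
```

Be careful with the disallow list: a single `Disallow: /` under `User-agent: *` tells every compliant crawler to stay out of the whole site, which is the last thing a site recovering from a Google blacklisting needs.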

If you want to add these files to your site, you are more than welcome to do so, but seek professional help before rewiring things. And again, rather than stuffing keywords and hacking your site for a better ranking, focus on your site's content.

Content is King. I'm out.

Articles from Big Picture Web (Squareflair's marketing partner)

Help articles from help.squarespace.com